The CAP Theorem: A Perspective on Change

If anybody asked me what the hardest thing to handle in software engineering is, I would say “the change itself” without thinking for even a second. We often find ourselves, for example, trying to understand, tolerate, or manage changes in our code, environment, or the tools we use. The same applies to the systems that we design. They need to be tolerant of change, whether it be a change in demand, a change in infrastructure, or a change in their own components. In my understanding, the CAP theorem is fundamentally about designing systems to tolerate changes that are mostly beyond our control.

Before diving into the usual explanation of the letters and the classical “two out of three” concept, let’s take a quick look at the story and motivation behind the CAP theorem. In 2000, when I was a little kid playing with the shutters of old floppy disks (don’t judge me, it was really fun back then), a legend across the Atlantic gave a talk at the PODC conference, beginning with the following words:

> Current distributed systems, even the ones that work, tend to be very fragile: they are hard to keep up, hard to manage, hard to grow, hard to evolve, and hard to program.
>
> Eric A. Brewer

Well, it’s 2024 now, and this statement remains as valid as ever. We are still striving to design systems that can tolerate changing situations, such as communication problems between different parts of the system. Speaking of which, what would be the solution in such cases? The magic answer is, of course, “It depends!” But we are fortunate that our legend provided us with a framework, the CAP theorem, to structure our priorities in a simple way. What does that mean? Let’s finally give the definitions and not disappoint every other fellow CAP article on the internet. The CAP theorem is about balancing three properties of a distributed system: consistency, availability, and partition tolerance.

- Consistency means that every read receives the most recent write or an error, so all nodes that respond return the same data at any given moment.

- Availability means that every request to a working node receives a non-error response, even if some other nodes are down, although that response is not guaranteed to contain the most recent write.

- Partition Tolerance means that the system continues to function properly even when network partitions occur, allowing it to handle communication breakdowns between nodes.

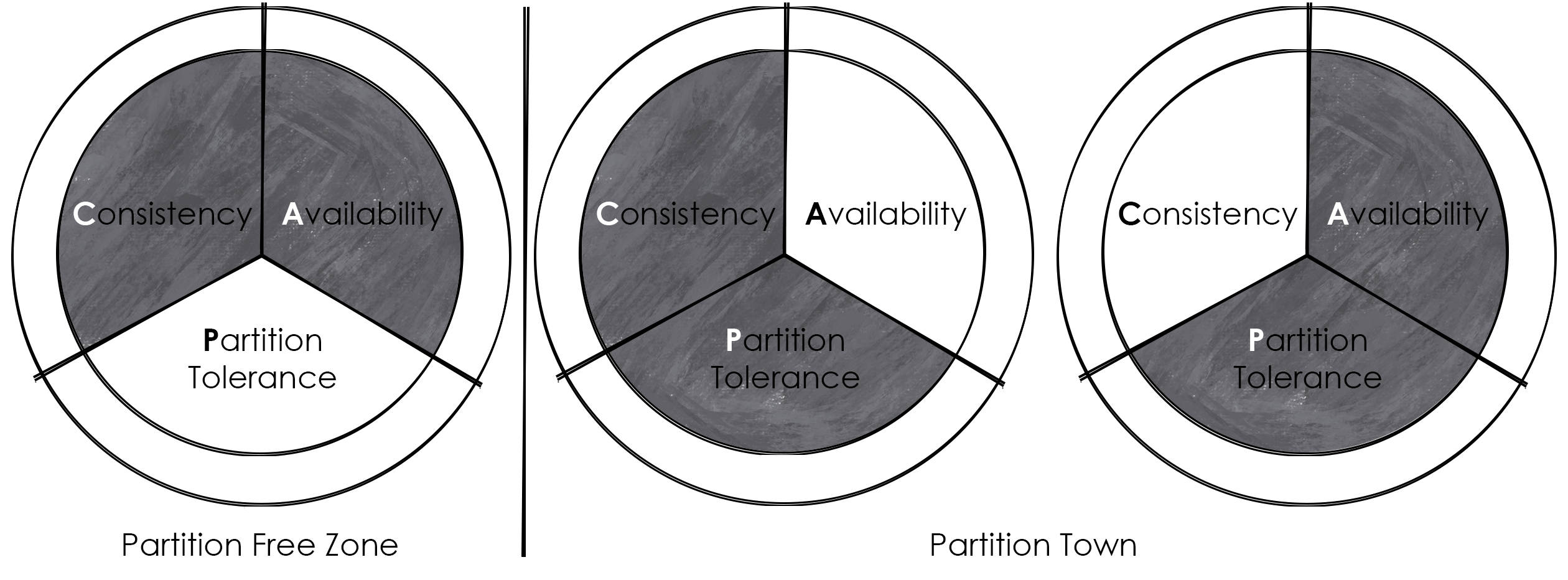

Originally, the CAP theorem was simplified as “you only get 2 out of these 3” to make things more digestible. But the truth, especially nowadays, is not that deterministic. Before discussing this through an example, let’s try to understand the idea better. Honestly, I struggled to imagine all three scenarios at first, especially when Consistency and Availability (CA) were considered together. I naively asked, “Oh well, if it’s possible to have CA systems, what the heck are we talking about?” But when I started to think of all systems as CA until a network partition occurs, it started to become clearer.
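To make the “CA until a partition occurs” idea a bit more concrete, here is a minimal Python sketch. It is a toy model, not a real database: the `Cluster`, `Node`, and `Unavailable` names and the `"CP"`/`"AP"` mode labels are all made up for this illustration. Two replicas stay in sync while the network is healthy; once `partitioned` is set, a write is either refused (CP) or accepted locally, leaving the replicas diverged (AP).

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when a node refuses a request to protect consistency."""


@dataclass
class Node:
    name: str
    value: str = "v0"


@dataclass
class Cluster:
    """Two replicas of one value; `mode` is the policy used during a partition."""
    mode: str  # "CP" or "AP" (hypothetical labels for this sketch)
    a: Node = field(default_factory=lambda: Node("a"))
    b: Node = field(default_factory=lambda: Node("b"))
    partitioned: bool = False

    def write(self, node: Node, value: str) -> None:
        if not self.partitioned:
            # Healthy network: replicate to both nodes, so they always agree.
            self.a.value = self.b.value = value
        elif self.mode == "CP":
            # The peer is unreachable: refuse rather than let replicas diverge.
            raise Unavailable(f"{node.name} cannot replicate during a partition")
        else:
            # AP: accept the write locally and reconcile after the partition.
            node.value = value

    def read(self, node: Node) -> str:
        # In CP mode no writes are accepted while partitioned, so the last
        # agreed value is still safe to return.
        return node.value


def demo(mode: str):
    cluster = Cluster(mode)
    cluster.write(cluster.a, "v1")   # no partition yet: both nodes hold "v1"
    cluster.partitioned = True
    try:
        cluster.write(cluster.a, "v2")
        outcome = "accepted"
    except Unavailable:
        outcome = "refused"
    return outcome, cluster.read(cluster.a), cluster.read(cluster.b)


if __name__ == "__main__":
    print("CP:", demo("CP"))  # write refused, both nodes still agree
    print("AP:", demo("AP"))  # write accepted, nodes now disagree
```

In the CP run the system gives up a bit of functionality to stay consistent; in the AP run it keeps answering but the two nodes temporarily hold different values.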

So, it’s actually about deciding whether to preserve consistency or availability while a partition lasts. But why do we have to make this decision?

Well, because we don’t have another option. If we decide to keep all the functionality available, there might be inconsistencies in behaviour during or after the partition. On the other hand, if we decide to keep the system always consistent, we might have to forfeit some functionality to maintain data consistency. So, it seems pretty straightforward, right? Your system is either consistent or available. (Un)fortunately, reality requires a more probabilistic, or let’s say a more fluid, approach. In Mr Brewer’s words from his article marking CAP’s twelfth year, “The choice between C and A can occur many times within the same system at very fine granularity; not only can subsystems make different choices, but the choice can change according to the operation or even the specific data or user involved.” Let’s now take a look at a familiar real-life example where consistency and availability show off their dance when partitions sing a Schlager.

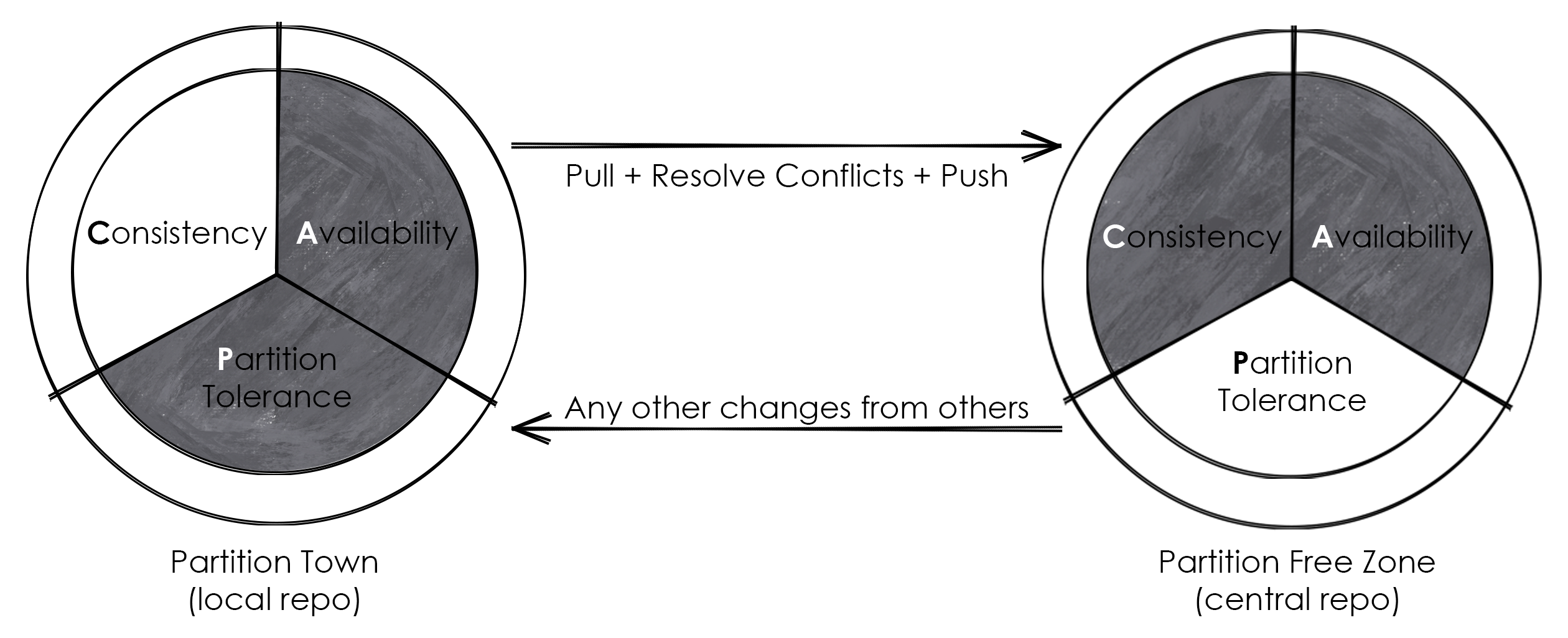

This is my favourite CAP example as Git is at the heart of almost every software development process now. As you may already know, Git is a distributed version control system that allows multiple developers to work on the same project concurrently. Partitions are in Git’s nature, as every user works on a local copy of the entire repository. So, unless a developer pushes something or pulls the latest version from the central repository, that developer is effectively partitioned from it. But the developer can keep using the system without errors: they can commit changes, create branches, rebase them, and so on. When the beautiful moment of pushing comes, the partition ends. Consistency then has to be reinstated, possibly by resolving merge conflicts.

This behaviour puts Git slightly more on the AP side: it stays available during a partition, while consistency is not always present and has to be rebuilt when the partition resolves. But it will eventually become consistent after a successful merge. This seems a bit fluid, doesn’t it?
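The “diverge while partitioned, reconcile afterwards” pattern can be sketched in a few lines of Python. This is a deliberately simplified, file-level three-way merge and not Git’s actual merge algorithm: the `three_way_merge` function and the dictionaries standing in for repository snapshots are assumptions made purely for illustration. Each side can change its copy freely while disconnected; on reconnection, changes made on only one side are merged automatically, and files changed differently on both sides are reported as conflicts for a human to resolve.

```python
from __future__ import annotations


def three_way_merge(
    base: dict[str, str],
    local: dict[str, str],
    remote: dict[str, str],
) -> tuple[dict[str, str], list[str]]:
    """Merge two snapshots that diverged from `base`.

    Each snapshot maps a file name to its content; a missing key means the
    file does not exist in that snapshot. Returns the merged snapshot and
    the list of file names that need manual resolution.
    """
    merged: dict[str, str] = {}
    conflicts: list[str] = []
    for name in sorted(set(base) | set(local) | set(remote)):
        b, l, r = base.get(name), local.get(name), remote.get(name)
        if l == r:
            choice = l               # both sides agree (or neither changed)
        elif l == b:
            choice = r               # only the remote side changed
        elif r == b:
            choice = l               # only the local side changed
        else:
            conflicts.append(name)   # both changed it, in different ways
            continue
        if choice is not None:       # None means the file was deleted
            merged[name] = choice
    return merged, conflicts


if __name__ == "__main__":
    base = {"README": "hello", "app.py": "print(1)"}
    # Work done offline, i.e. during the "partition":
    local = {"README": "hello", "app.py": "print(2)"}
    # Meanwhile, somebody else pushed to the central repository:
    remote = {"README": "hello world", "app.py": "print(3)"}

    merged, conflicts = three_way_merge(base, local, remote)
    print(merged)     # {'README': 'hello world'}
    print(conflicts)  # ['app.py']
```

Both sides stayed fully usable while apart (the A in AP), and the price is paid at merge time, when `app.py` has to be reconciled by hand before the two copies are consistent again.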

Git, like many other modern systems, demonstrates that the CAP theorem is not a rigid limitation. It’s not designed to constrain us; it’s designed to make us aware of the fluid trade-offs we must manage.

Thank you for reading this far. I did not want to bring up the classical examples here as you can find many in other brilliant CAP content. So, I apologize if I disappointed you.